Playtomic's Pipeline

Your continuous integration (CI) pipeline is one of the most important pieces of software you can have in your business: it runs every time your code changes.

In Playtomic's backend team, we are quite proud of our CI pipeline. At least, I am :) It manages not only the integration of dozens of branches every day, but also the deployments to our development and production environments. It currently handles around 35 projects and runs totally unsupervised: every change to the develop branch goes directly to the development environment, and every change to master goes directly to production.

Ruben told us what Playtomic’s stack looks like. In this post, I want to share some hints about what we had in mind when building our pipeline. It is not exhaustive, but I think it is a good approximation.

# What should a pipeline look like?

When building a pipeline, there are several points you have to care about. It has to be:

- Fast: building, testing and deployment must be fast. How fast is fast? 1 minute? 2 minutes? It depends on your requirements, but staying under 2 minutes is enough for our teams.

- Reliable: several simultaneous builds must not interfere with each other (e.g., use random ports for testing).

- Replicable: builds of the same commit must produce the same artefact.
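To make the "random ports" point concrete, here is a minimal shell sketch that picks a port before the integration tests run. The function name and the 20000 to 29999 range are assumptions, not part of Playtomic's pipeline, and a random pick only makes collisions between simultaneous builds unlikely; it does not check that the port is actually free.

```shell
#!/bin/sh
# Hypothetical helper: print a pseudo-random TCP port in [20000, 29999]
# so that concurrent builds on the same node are unlikely to collide.
# It does NOT verify that the port is free.
random_test_port() {
    # Read 2 random bytes as an unsigned integer (0..65535).
    n=$(od -An -N2 -tu2 /dev/urandom | tr -d ' ')
    echo $((20000 + n % 10000))
}
```

The build would then hand the value to the integration tests, for example as a Maven system property whose name your tests read.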

Pushes to develop and master always happen after code review (we use GitHub pull requests). On top of that, the pipeline gives us:

- Continuous Deployment: a push to develop goes directly to the development environment; a push to master goes to production. No questions asked.

- Less drift between develop and production: several minor changes are safer to deploy to production than a few major ones.

- An easy way to know which version is deployed where: if the build is green, it is deployed, and the build includes the commit hash. This is important in a microservices architecture.
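The branch-to-environment rule and the commit hash in the build fit in a couple of shell functions. This is a sketch under assumptions: the environment names (develop, pro) come from the deploy snippet later in this post, while the function names and the `<version>-<short hash>` tag format are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: which environment a push deploys to.
# develop -> development environment, master -> production ("pro"),
# anything else (feature branches, PRs) -> no automatic deployment (empty).
target_env() {
    case "$1" in
        develop) echo "develop" ;;
        master)  echo "pro" ;;
        *)       echo "" ;;
    esac
}

# Hypothetical tag format "<version>-<short commit hash>", so that a
# running service tells you exactly which commit it was built from.
# $1 = project version, $2 = full commit hash.
image_tag() {
    short=$(printf '%s' "$2" | cut -c1-7)
    echo "$1-$short"
}
```

For instance, `image_tag 1.4.0 0123456789abcdef` prints `1.4.0-0123456`.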

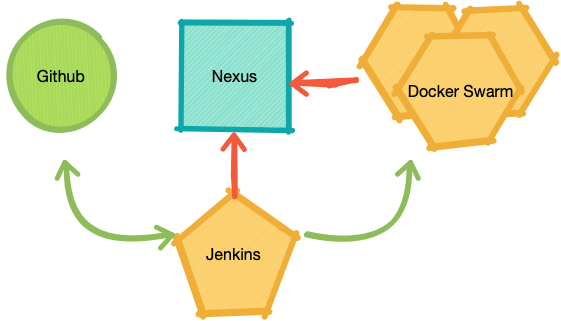

Software components:

These are the components that form our pipeline:


- A git repo: GitHub

- A CI system: Jenkins

- An artefact store: Nexus

- An application container: Docker Swarm

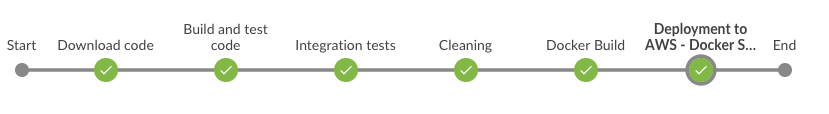

Pipeline steps:

- Download code (obviously!)

- Validation (master does not accept SNAPSHOT versions)

- Build + unit tests

- Integration tests

- Docker build

- Docker push

- Docker deploy

- Clean (be nice)
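The Validation rule (master does not accept SNAPSHOT versions) is enforced through Maven in the pipeline, but the decision itself is simple enough to sketch in shell. This assumes Jenkins multibranch variables: `BRANCH_NAME`, plus `CHANGE_ID` and `CHANGE_TARGET`, which Jenkins sets for pull-request builds. The function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical sketch of the Validation stage's decision.
# $1 = branch name, $2 = change (PR) id or empty, $3 = PR target branch.
needs_release_validation() {
    [ "$1" = "master" ] && return 0
    [ -n "$2" ] && [ "$3" = "master" ] && return 0
    return 1
}

# Maven marks development versions with a -SNAPSHOT suffix.
is_snapshot() {
    case "$1" in
        *-SNAPSHOT) return 0 ;;
        *)          return 1 ;;
    esac
}

# Returns non-zero when a build that must be a release uses a SNAPSHOT.
# $4 = project version.
validate_version() {
    if needs_release_validation "$1" "$2" "$3" && is_snapshot "$4"; then
        echo "SNAPSHOT version $4 is not allowed here" >&2
        return 1
    fi
    return 0
}
```

In the actual pipeline the check is delegated to `mvn validate -Denforcer.skip=false` (see the Validation snippet). The Maven Enforcer Plugin's `requireReleaseVersion` rule is one rule that rejects SNAPSHOT versions, although the post does not say which rules Playtomic configures.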

Here are some snippets. I am skipping the contents of the config class and of sendNotificationOk(), but I think the code is still clear.
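The Download code snippet below calls two helpers whose bodies are not shown, `lastLocalCommitHash()` and `mavenVersion()`. Assuming they simply ask git and Maven, rough shell equivalents could look like this. They are guesses rather than Playtomic's code, and pinning maven-help-plugin 3.2.0 (a version that supports `-DforceStdout`) is also an assumption.

```shell
#!/bin/sh
# Hypothetical stand-in for lastLocalCommitHash(): full hash of HEAD
# in the given directory (defaults to the current one).
last_local_commit_hash() {
    git -C "${1:-.}" rev-parse HEAD
}

# Hypothetical stand-in for mavenVersion(): the project <version> from the
# POM, printed without Maven's log output (-q plus -DforceStdout).
# Assumes mvn on the PATH; the plugin version is pinned as an assumption.
maven_version() {
    mvn -q -f "${1:-pom.xml}" \
        org.apache.maven.plugins:maven-help-plugin:3.2.0:evaluate \
        -Dexpression=project.version -DforceStdout
}
```

In the Groovy snippet, these values end up in `env.COMMIT_HASH` and `env.POM_VERSION`.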

Download code (java node):

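```groovy
stage('Download code') {
    // Start from an empty workspace, then check out the commit being built
    deleteDir()
    checkout scm
    // Helpers whose bodies are not shown in this post
    env.COMMIT_HASH = lastLocalCommitHash()
    env.POM_VERSION = mavenVersion()
}
```

Validation (java node):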

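```groovy
// Only for master, or for pull requests whose base is master:
// run the Maven Enforcer rules (master does not accept SNAPSHOT versions)
if (config.isMasterBranch(env.BRANCH_NAME) || (env.CHANGE_ID && config.isMasterBranch(pullRequest.base))) {
    stage('Validating project') {
        sh "mvn validate -Denforcer.skip=false"
    }
}
```

Build + unit tests (java node):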

```groovy
stage('Build and test code') {
    // Compile and run unit tests; integration tests are left for the next stage
    sh "mvn clean package -DskipITs"
    sendNotificationOk(config, 'Build and unit tests: OK')
}
```

Integration tests (java node):

```groovy
stage('Integration tests') {
    // "verify" runs the Maven lifecycle up to and including the integration tests
    sh "mvn verify"
}
sendNotificationOk(config, 'Integration tests: OK')
```

Docker build (docker node):

```groovy
stage('Docker Build') {
    // docker-compose.yml uses DOCKER_REPOSITORY and SERVICE_VERSION to tag the image of the service.
    sh "DOCKER_REPOSITORY=${dockerRepository} SERVICE_VERSION=${version} docker-compose -f docker-deploy/docker-compose.yml build"
}
```

Docker deploy (docker node):

```groovy
stage("Deployment to AWS - Docker Swarm ${environment}") {
    DOMAIN = config.getPublicDomain()
    // No suffix for production
    if (environment != "pro") {
        DOMAIN = DOMAIN + "-${environment}"
    }

    // Defaults for develop and feature environments
    REPLICAS = 1
    // The develop Docker host; this is an Elastic IP
    DOCKER_HOST = config.getDevelopDockerHost()
    DOCKER_CERT_PATH = config.getDevelopDockerCert()
    profile = environment

    switch (environment) {
        case "develop":
            break
        case "pro":
            profile = "amazon-pro"
            REPLICAS = 2
            DOCKER_HOST = config.getProDockerHost()
            DOCKER_CERT_PATH = config.getProDockerCert()
            break
    }

    DOCKER_REPOSITORY = dockerRepository
    SERVICE_VERSION = version

    // Remember that:
    // 1. We need docker login to access the Docker repository.
    // 2. We need --with-registry-auth to pass these Docker credentials to the Docker host
    //    (so that it can access the Docker repository).
    // 3. If we launched docker deploy on the remote machine, how would it access the Docker repository?
    // 4. Conclusion: we open an SSH tunnel and launch docker deploy on this machine.
    // See: https://unix.stackexchange.com/questions/83806/how-to-kill-ssh-session-that-was-started-with-the-f-option-run-in-background
    // -L opens an SSH tunnel (local port 2374 -> remote Docker socket). -f sends ssh to the background.
    // The remote command keeps the tunnel open for 5 seconds, but ssh does not exit while the tunnel is in use.
    // So, immediately afterwards, we launch docker stack deploy, which uses the tunnel (-H localhost:2374).
    sh "ssh -i ${DOCKER_CERT_PATH}/cert.pem -f -L localhost:2374:/var/run/docker.sock docker@${DOCKER_HOST} sleep 5; DOCKER_REPOSITORY=${dockerRepository} SERVICE_VERSION=${version} SPRING_PROFILES_ACTIVE=${profile} JAVA_ENABLE_DEBUG=${debug} REPLICAS=${REPLICAS} docker -H localhost:2374 stack deploy ${DOMAIN} --with-registry-auth -c docker-deploy/docker-compose.yml"
    sendNotificationOk(config, "Deployed to ${DOMAIN}")
}
```

Clean (docker node):

```groovy
dir('docker-deploy') {
    // Remove the build output left in the workspace
    sh "rm -rf target/"
}
```

Out of the scope of this post:

- Different pipelines for libraries and services:

  - Libraries: develop = SNAPSHOT; master = RELEASE.

- Auth: how to authenticate Jenkins against GitHub, Nexus and Docker Swarm. Auth between Jenkins and Swarm is outlined in the deploy step.
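Auth is out of scope here, but the deploy step does show how Jenkins reaches the Swarm host: an SSH tunnel from a local port to the remote Docker socket, kept alive by `sleep 5` until the deploy starts using it. As an alternative sketch (not what the pipeline above does), OpenSSH control sockets (`-M`, `-S`, `-O exit`) let you close the tunnel explicitly once the deploy finishes. Key file, host, stack name and compose path are parameters, the environment variables of the original command are omitted for brevity, and setting `RUN=echo` turns the function into a dry run that only prints the commands.

```shell
#!/bin/sh
# Hypothetical alternative to "ssh -f -L ... sleep 5": keep the tunnel open
# exactly as long as the deploy needs it.
# RUN=echo gives a dry run; empty RUN executes the commands for real.
RUN=${RUN:-}

# $1 = SSH key file, $2 = Docker host, $3 = stack name, $4 = compose file
deploy_through_tunnel() {
    ctl="/tmp/docker-tunnel-$$.sock"
    # -f: background after auth, -N: no remote command, -M/-S: control socket,
    # ExitOnForwardFailure: fail if the forward cannot be set up,
    # -L: forward local port 2374 to the remote Docker socket.
    $RUN ssh -i "$1" -f -N -M -S "$ctl" -o ExitOnForwardFailure=yes \
        -L localhost:2374:/var/run/docker.sock "docker@$2" || return 1
    $RUN docker -H localhost:2374 stack deploy --with-registry-auth -c "$4" "$3"
    status=$?
    # Close the tunnel through the control socket, whatever the deploy result.
    $RUN ssh -S "$ctl" -O exit "docker@$2"
    return $status
}
```

The trade-off: the `sleep 5` version is a single command line that is easy to replay, while the control-socket version guarantees the tunnel is torn down instead of relying on timing.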

Tips:

Make your commands explicit, that is, specify all environment variables along with the command. This way, if something goes wrong, you can find the command in the log and replay it on your machine.
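One way to follow this tip is a tiny wrapper that logs the whole command line, environment included, and then runs it through the standard `env` utility, which applies the `VAR=value` words only to that one command. The wrapper name is hypothetical, and since `$*` loses quoting, it assumes values without spaces.

```shell
#!/bin/sh
# Hypothetical wrapper: log the exact command line (environment included),
# then run it. Callers pass VAR=value words first, then the command.
# Assumes values contain no spaces, because "$*" drops the original quoting.
run_explicit() {
    printf '+ %s\n' "$*"
    env "$@"
}
```

For example, `run_explicit DOCKER_REPOSITORY="$repo" SERVICE_VERSION="$version" docker-compose -f docker-deploy/docker-compose.yml build` (with `repo` and `version` as hypothetical shell variables) would log a line you can paste straight into your terminal.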

Next steps:

Some stuff that we want to incorporate or improve:

- System tests: set up a test environment in docker swarm.

- Quality gates: better implemented as PR checks than in the pipeline. If implemented in the pipeline, don’t run them on master (the build would break after the merge).

- Multi-stage Docker builds as the pipeline (pros: no specific Jenkins nodes required; cons: slower, since dependencies have to be downloaded on every build).
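For the quality-gate item, the "PR check, not master" rule could start as a guard around the gate. This sketch assumes Jenkins multibranch builds, where `CHANGE_ID` is set only for pull-request builds; the function name is hypothetical, and which quality tool runs behind the guard is left open.

```shell
#!/bin/sh
# Hypothetical guard: run quality gates only for pull-request builds and
# never on master, so a failing gate cannot break master after a merge.
# $1 = branch name, $2 = change (PR) id, empty for plain branch builds.
should_run_quality_gate() {
    [ "$1" = "master" ] && return 1
    [ -n "$2" ]
}
```

A stage would then call something like `should_run_quality_gate "$BRANCH_NAME" "$CHANGE_ID"` before running the gate.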